06 Mar 2019

Understanding the growing importance and meaning of data science

“Data is the new oil” — anonymous

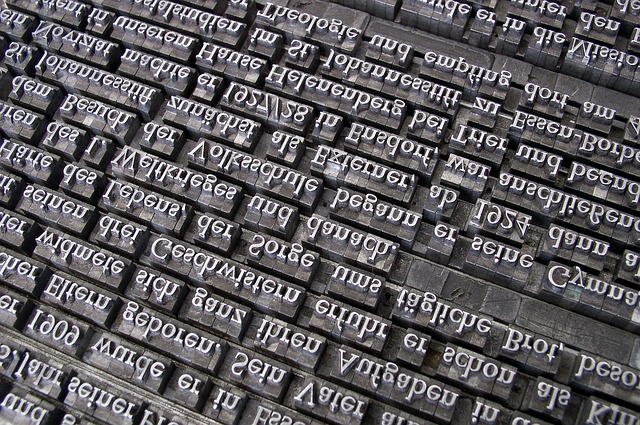

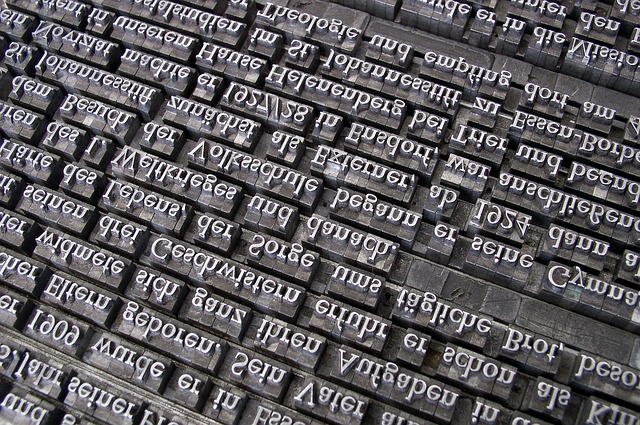

Showing Various Fields.

Data is everywhere around us. More than 200 million Twitter users share content, videos and photos every day (social media data). 500 million smartphones are used for calls and text messages every second (smartphone user, call and message data). At any given moment, 10 thousand airplanes are in the air (aviation data). More than 10 million bank transactions are carried out daily (bank data).

According to recent research by IBM, 90 percent of the world's data has been generated in the past three years alone. This data originates from everywhere: social media, website visits, bank transactions, documents and weather sensors, to name just a few sources. It is ubiquitous and one of the cheapest resources available on Earth.

The volume of this data is hard to measure, and it grows every second. This immense amount of data is neither garbage nor waste; it is a diamond mine for organizations, industries and communities, and the fuel that drives effective decision-making.

But how should this data be used? Herein lies the pivotal question. How can technology and algorithms make sense of such a massive pile of data? There is a single answer to these questions: data science.

Data science is the practice of connecting the dots between the world of business and the world of data. It is the unconventional art of converting unstructured data into linked, cleaned and structured sets of useful information and knowledge. This information, in turn, enables effective decision-making, smart planning and intelligent applications. The process of data science includes finding patterns, identifying insights and prototyping them. The major components that make it a complete end-to-end process are:

Getting the data – Data Mining: The first step for any data science application is to obtain the right set of data. Data mining includes practices for obtaining data from particular sources and creating the required datasets. Sources of data vary from business to business. The most common are extraction from the open web, parsing documents and files, survey-based data collection, and continuously generated data streams.

Fixing the data – Data Cleaning: There is a huge amount of data available, but not every chunk of it is useful. This standardization step includes cleaning the data, handling noise and converting it into an analysis-ready dataset.

Analyzing the data – Data Analysis: The most important step of data science is to analyze the data and generate outputs. Popular approaches from statistics, machine learning and text mining are applied in this step, including statistical measures such as model fitting, number crunching and regression; machine learning techniques such as linear classifiers, logistic functions or neural networks; and natural language processing practices such as text mining, sentiment analysis or entity recognition.

Actionable Insights – Data Prototyping: Data analysis produces actionable insights. These insights are used to make better, data-driven decisions and to develop data applications. Dashboards, visualizations, reports, sheets and applications give a descriptive view of these insights. The following infographic shows some examples of real-life business problems involving data science and their impact.

Sources: Wiki, SB.
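The four steps above (getting, fixing and analyzing the data, then turning the result into an insight) can be sketched end to end in a few lines of Python. This is a minimal, illustrative pipeline, not the author's method: the CSV text, the column names `ad_spend` and `sales`, and all helper functions are hypothetical, and the analysis step uses an ordinary least-squares line fit as one example of "fitting a model".

```python
import csv
import io

# Hypothetical raw data; in practice it would come from the web, files,
# surveys or a data stream. Two rows are deliberately malformed.
RAW_CSV = """ad_spend,sales
10,25
20,45
,30
30,65
40,85
abc,90
"""


def get_data(text: str) -> list:
    """Getting the data: parse CSV text into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(text)))


def clean_data(rows: list) -> list:
    """Fixing the data: keep only rows whose fields parse as numbers."""
    cleaned = []
    for row in rows:
        try:
            x = float(row["ad_spend"])
            y = float(row["sales"])
        except (TypeError, ValueError):
            continue  # missing or non-numeric value: treat the row as noise
        cleaned.append((x, y))
    return cleaned


def fit_line(points: list) -> tuple:
    """Analyzing the data: ordinary least-squares fit of y = a + b * x."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def report(intercept: float, slope: float) -> str:
    """Actionable insight: summarize the fitted model in one sentence."""
    return (
        f"Each extra unit of ad spend is associated with {slope:.2f} more sales "
        f"(baseline {intercept:.2f})."
    )


points = clean_data(get_data(RAW_CSV))
print(report(*fit_line(points)))
# prints: Each extra unit of ad spend is associated with 2.00 more sales (baseline 5.00).
```

The two malformed rows are dropped during cleaning, and the remaining four points lie exactly on a line, so the fit recovers it. A real project would more likely lean on libraries such as pandas or scikit-learn for these steps.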

Thank you for reading my post. Keep scrolling.

26 Feb 2019

Jekyll.

What is Jekyll?

Jekyll is a simple, blog-aware, static site generator perfect for personal, project, or organization sites. Think of it like a file-based CMS, without all the complexity. Jekyll takes your content, renders Markdown and Liquid templates, and spits out a complete, static website ready to be served by Apache, Nginx or another web server.

Jekyll is also extendable. You give it text written in your favorite markup language, and it churns through layouts to create a static website. Throughout that process, you can tweak what the site URLs look like, what data gets displayed in the layout, and more.

Why Jekyll?

It’s not easy to say, but it’s true.

Scoring 100 out of 100 on Google PageSpeed Insights is not easy, but there are ways to make sure your site scores a perfect century.

Is it easy to learn?

Yes, it’s easy to learn. For resources, try this: Learn more about Jekyll →

23 Feb 2019

This is the second and last post of the blog series ‘Understanding Natural Language Processing’.

About Semantics

Checking (site: pixabay).

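In technical terms (for example, in compilers), semantic analysis is the task of ensuring that the declarations and statements of a program are semantically correct, i.e., that their meaning is clear and consistent with the way in which control structures and data types are supposed to be used. In natural language processing, semantics is likewise about meaning: what words, sentences and texts convey.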

Associated Major Tasks

Sentiment analysis: extract subjective information, usually from a set of documents, often using online reviews to determine “polarity” about specific objects. It is especially useful for identifying trends of public opinion on social media for marketing purposes.
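A minimal lexicon-based sketch of polarity detection follows, assuming a tiny hand-made word list; `LEXICON`, `NEGATORS` and `polarity` are hypothetical names. Production systems use far larger lexicons or trained classifiers; this only illustrates scoring subjective words and flipping the sign after a negation.

```python
# Hypothetical polarity lexicon: word -> score (positive or negative).
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def polarity(review: str) -> str:
    """Label a review positive, negative or neutral by summing word scores."""
    score = 0
    negate = False
    for raw in review.lower().split():
        word = raw.strip(".,!?")
        if word in NEGATORS:
            negate = True  # flip the sign of the next sentiment word
            continue
        value = LEXICON.get(word, 0)
        if value:
            score += -value if negate else value
            negate = False
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("I love this phone, the camera is great!"))  # prints: positive
print(polarity("Not good at all."))  # prints: negative
```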

Topic segmentation and recognition: given a chunk of text, separate it into segments, each devoted to a topic, and identify the topic of each segment.

Natural language generation: convert information _from computer databases or semantic intents_ into readable human language.
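The simplest form of natural language generation is template filling. The sketch below assumes a hypothetical database row with `city`, `country`, `temp_c` and `condition` fields; `describe_weather` is an illustrative name, and real NLG systems also plan content and vary sentence structure.

```python
def describe_weather(row: dict) -> str:
    """Template-based NLG: render one database row as an English sentence."""
    # Derive a phrase from the data before filling the template.
    feel = "below freezing" if row["temp_c"] < 0 else "at or above freezing"
    return (
        f"In {row['city']}, {row['country']}, it is {row['temp_c']} degrees Celsius "
        f"({feel}) with {row['condition']}."
    )


# Hypothetical record, as it might come from a weather table.
record = {"city": "Ottawa", "country": "Canada", "temp_c": -5, "condition": "light snow"}
print(describe_weather(record))
# prints: In Ottawa, Canada, it is -5 degrees Celsius (below freezing) with light snow.
```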

Question answering: given a human-language question, determine its answer. Typical questions have a specific right answer (such as “What is the capital of Canada?”), but sometimes open-ended questions are also considered (such as “What is the meaning of life?”).

Relationship extraction: given a chunk of text, identify the relationships among named entities (e.g., who is married to whom).
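Relationship extraction is often bootstrapped with hand-written patterns. The sketch below assumes a single regular expression for the "married to" relation between capitalized names; the pattern and the name `extract_married` are illustrative, and learned extractors generalize far better.

```python
import re

# Illustrative pattern for one relation: "<Name> is/was married to <Name>",
# where a name is one or more capitalized words.
NAME = r"[A-Z][a-z]+(?: [A-Z][a-z]+)*"
MARRIED_TO = re.compile(rf"({NAME}) (?:is|was) married to ({NAME})")


def extract_married(text: str) -> list:
    """Return (subject, relation, object) triples matched by the pattern."""
    return [(a, "married_to", b) for a, b in MARRIED_TO.findall(text)]


print(extract_married("Marie Curie was married to Pierre Curie. Paris is in France."))
# prints: [('Marie Curie', 'married_to', 'Pierre Curie')]
```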

Named entity recognition (NER): given a stream of text, determine which items in the text _map to proper names, such as people or places,_ and what the type of each such name is (e.g., person, location, organization).
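A gazetteer (a list of known names with their types) gives the simplest form of named entity recognition. The sketch below assumes a tiny hypothetical gazetteer and performs a greedy longest-match lookup over tokens; `tag_entities` is an illustrative name. Real NER systems use statistical or neural sequence models so that they can recognize names they have never seen.

```python
# Hypothetical gazetteer: lowercase name -> entity type.
GAZETTEER = {
    "alan turing": "PERSON",
    "canada": "LOCATION",
    "ottawa": "LOCATION",
    "ibm": "ORGANIZATION",
}
# Length of the longest gazetteer name, in tokens.
MAX_LEN = max(len(name.split()) for name in GAZETTEER)


def tag_entities(text: str) -> list:
    """Greedy longest-match lookup of token spans in the gazetteer."""
    tokens = [t.strip(".,!?") for t in text.split()]
    entities, i = [], 0
    while i < len(tokens):
        # Try the longest possible span starting at token i first.
        for n in range(min(MAX_LEN, len(tokens) - i), 0, -1):
            span = " ".join(tokens[i:i + n])
            label = GAZETTEER.get(span.lower())
            if label:
                entities.append((span, label))
                i += n
                break
        else:
            i += 1  # no entity starts at token i
    return entities


print(tag_entities("The capital of Canada is Ottawa."))
# prints: [('Canada', 'LOCATION'), ('Ottawa', 'LOCATION')]
```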

About Speech

Different voices (site: pixabay).

Speech is the expression of, or the ability to express, thoughts and feelings by articulate sounds. The word can also mean a formal address or discourse delivered to an audience.

Associated Tasks

Speech recognition: given a sound clip of a person or people speaking, determine the textual representation of the speech. This is the opposite of text-to-speech and is one of the extremely difficult problems colloquially termed “AI-complete”. In natural speech there are hardly any pauses between successive words, so speech segmentation is a necessary subtask of speech recognition (see below). Note also that in most spoken languages, the sounds representing successive letters blend into each other in a process termed coarticulation.

Speech segmentation: given a sound clip of a person or people speaking, _separate it into words_. It is a subtask of speech recognition and is typically grouped with it.

Text-to-speech: given a text, convert its units into a spoken representation. Text-to-speech can be used to aid the visually impaired.

22 Feb 2019

This is the first post of the blog series ‘Understanding Natural Language Processing’.

Dive into NLP

Technical definition: Natural language processing (NLP) is a subfield of computer science, information engineering, and artificial intelligence concerned with the interactions between computers and human (natural) languages, in particular how to program computers to process and analyze large amounts of natural language data.

The history of natural language processing generally started in the 1950s, although work can be found from earlier periods. In 1950, Alan Turing published an article titled “Computing Machinery and Intelligence”, which proposed what is now called the Turing test as a criterion of intelligence.

Different languages (site: pixabay).

Major evaluations and tasks

Though natural language processing tasks are closely intertwined, they are frequently subdivided into categories for convenience: syntax, semantics and speech.

site:pixabay.

Syntax

In linguistics, syntax is the set of rules, principles, and processes that govern the structure of sentences (sentence structure) in a given language, usually including word order. The term syntax is also used to refer to the study of such principles and processes.

Grammar induction

Generate a formal grammar that describes a language’s syntax.

Lemmatization

The task of removing only inflectional endings and returning the base dictionary form of a word, which is also known as a lemma.
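A minimal sketch of dictionary-plus-rules lemmatization, assuming a tiny hypothetical exception table for irregular forms and a few suffix rules for regular ones. The suffix rules are crude (they behave like a stemmer and would turn "houses" into "hous"); real lemmatizers rely on a full dictionary and on the word's part of speech.

```python
# Hypothetical exception table for irregular forms.
IRREGULAR = {"was": "be", "were": "be", "is": "be", "went": "go", "mice": "mouse"}

# Ordered (suffix, replacement) rules for regular inflections; the first
# matching rule wins. Crude: "houses" would wrongly become "hous".
SUFFIX_RULES = [("ies", "y"), ("ing", ""), ("ed", ""), ("es", ""), ("s", "")]


def lemmatize(word: str) -> str:
    """Return an approximate lemma: dictionary lookup first, then suffix rules."""
    w = word.lower()
    if w in IRREGULAR:
        return IRREGULAR[w]
    for suffix, replacement in SUFFIX_RULES:
        # Keep at least three letters of stem to avoid mangling short words.
        if w.endswith(suffix) and len(w) - len(suffix) >= 3:
            return w[: -len(suffix)] + replacement
    return w


print([lemmatize(w) for w in ["studies", "walked", "Mice", "cats"]])
# prints: ['study', 'walk', 'mouse', 'cat']
```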

Part-of-speech tagging

Given a sentence, determine the part of speech for each word. Many words, especially common ones, can serve as multiple parts of speech; for example, “book” can be a noun (“a good book”) or a verb (“book a flight”).
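The sketch below shows a lexicon-based tagger with one context rule, assuming a hypothetical lexicon that lists each word's possible tags with the most frequent first. It resolves the noun/verb ambiguity of "book" by looking at the previous tag; real taggers learn such decisions from annotated corpora, for example with hidden Markov models or neural networks.

```python
# Hypothetical lexicon: possible tags per word, most frequent first.
TAG_LEXICON = {
    "i": ["PRON"], "you": ["PRON"], "a": ["DET"], "the": ["DET"],
    "to": ["PART"], "want": ["VERB"], "read": ["VERB"],
    "book": ["NOUN", "VERB"], "flight": ["NOUN"], "good": ["ADJ"],
}


def pos_tag(sentence: str) -> list:
    """Tag each word, resolving NOUN/VERB ambiguity from the previous tag."""
    tagged = []
    for word in sentence.lower().strip(".").split():
        options = TAG_LEXICON.get(word, ["NOUN"])  # unknown word: guess NOUN
        tag = options[0]  # default: most frequent tag
        # After "to" or a pronoun, an ambiguous word is more likely a verb.
        if len(options) > 1 and tagged and tagged[-1][1] in ("PART", "PRON"):
            if "VERB" in options:
                tag = "VERB"
        tagged.append((word, tag))
    return tagged


print(pos_tag("I want to book a flight."))
print(pos_tag("I read a good book"))
```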

Parsing

Determine the parse tree (grammatical analysis) of a given sentence. The grammar of natural languages is ambiguous, and typical sentences have multiple possible analyses. In fact, perhaps surprisingly, a typical sentence may have thousands of potential parses (most of which will seem completely nonsensical to a human).
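To see this ambiguity concretely, here is a CKY chart parser that counts parses instead of building trees, assuming a tiny hypothetical grammar in Chomsky normal form. For the classic sentence "I saw the man with the telescope" it finds two analyses: the prepositional phrase attaches either to "saw" or to "the man".

```python
from collections import defaultdict

# Toy grammar in Chomsky normal form (an illustrative assumption).
BINARY = [  # (parent, left child, right child)
    ("S", "NP", "VP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("NP", "Det", "N"),
    ("NP", "NP", "PP"),
    ("PP", "P", "NP"),
]
LEXICAL = {  # word -> possible categories
    "i": ["NP"], "saw": ["V"], "the": ["Det"],
    "man": ["N"], "telescope": ["N"], "with": ["P"],
}


def count_parses(sentence: str, start: str = "S") -> int:
    """CKY chart where chart[i][j][cat] = number of parses of words[i:j] as cat."""
    words = sentence.lower().split()
    n = len(words)
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for cat in LEXICAL.get(word, []):
            chart[i][i + 1][cat] += 1
    for width in range(2, n + 1):
        for i in range(n - width + 1):
            j = i + width
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY:
                    count = chart[i][k][left] * chart[k][j][right]
                    if count:
                        chart[i][j][parent] += count
    return chart[0][n][start]


print(count_parses("I saw the man with the telescope"))  # prints: 2
```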

Sentence breaking

Given a chunk of text, find the sentence boundaries. Sentence boundaries are often marked by periods or other punctuation marks, but these same characters can serve other purposes, such as marking abbreviations.
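A minimal rule-based splitter, assuming a small hypothetical abbreviation list: it breaks after '.', '!' or '?' followed by whitespace, unless the token ending there is a known abbreviation. Trained models such as NLTK's Punkt tokenizer learn these exceptions from data instead.

```python
import re

# Hypothetical abbreviation list; real splitters use much larger lists
# or learn abbreviations from data.
ABBREVIATIONS = {"dr.", "mr.", "mrs.", "e.g.", "i.e.", "etc."}


def split_sentences(text: str) -> list:
    """Split after '.', '!' or '?' followed by whitespace, skipping abbreviations."""
    sentences, start = [], 0
    for match in re.finditer(r"[.!?]\s+", text):
        end = match.start() + 1  # keep the punctuation mark in the sentence
        last_token = text[start:end].split()[-1].lower()
        if last_token in ABBREVIATIONS:
            continue  # the period marks an abbreviation, not a boundary
        sentences.append(text[start:end].strip())
        start = match.end()
    tail = text[start:].strip()
    if tail:
        sentences.append(tail)
    return sentences


print(split_sentences("Dr. Smith arrived. He sat down! Did he speak?"))
# prints: ['Dr. Smith arrived.', 'He sat down!', 'Did he speak?']
```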

Terminology extraction

The goal of terminology extraction is to automatically extract relevant terms from a given corpus.
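One simple approach to terminology extraction is to count word n-grams and keep the frequent ones that neither start nor end with a stopword. The sketch below assumes a small hypothetical stopword list and corpus; `extract_terms` is an illustrative name, and real tools rank candidates with statistical measures such as TF-IDF or C-value.

```python
import re
from collections import Counter

# Hypothetical stopword list; real systems use larger lists.
STOPWORDS = {"the", "a", "an", "of", "and", "is", "in", "to", "for", "are", "on", "with"}


def extract_terms(corpus: list, max_n: int = 2, min_count: int = 2) -> list:
    """Return frequent word n-grams (n <= max_n) as (term, count) pairs,
    skipping candidates that start or end with a stopword."""
    counts = Counter()
    for doc in corpus:
        words = re.findall(r"[a-z]+", doc.lower())
        for n in range(1, max_n + 1):
            for i in range(len(words) - n + 1):
                gram = words[i:i + n]
                if gram[0] in STOPWORDS or gram[-1] in STOPWORDS:
                    continue
                counts[" ".join(gram)] += 1
    return [(term, c) for term, c in counts.most_common() if c >= min_count]


corpus = [
    "Neural networks are used in machine translation.",
    "Machine translation quality improved with neural networks.",
    "Statistical machine translation came first.",
]
print(extract_terms(corpus))
```

On this toy corpus, "machine translation" and "neural networks" surface as multi-word terms alongside their component words.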
